
nivek

As Above So Below

British Robot Jailed by Egyptian Border Patrol For Allegedly Being a Spy

A good sign that robots are becoming more human-like is the news that a robotic artist was invited to a contemporary art show in Egypt to display ‘her’ sculpture and answer questions using her AI-controlled voice. A better sign that robots are becoming more human-like is the news that border patrol agents in Egypt arrested the robot and detained her in a human jail for ten days on suspicion that she was a spy and would be using her AI eyes to steal Egyptian secrets. Robots in jail? What dystopian movie have we awakened in?


Aidan Meller is the art director at the Aidan Meller Galleries in the UK and the human responsible for getting Ai-Da to a recent sculpture exhibition taking place at the Great Pyramid of Giza. Ai-Da is named both for computing pioneer Ada Lovelace and for the artificial intelligence (AI) that directs her camera-equipped eyes and robotic arm to create drawings based on what she ‘sees’ and how she interprets the view, making her the “world’s first ultra-realistic artist” according to Meller’s website. Unfortunately, security officials at the Egyptian Customs Office were not interested in Ai-Da’s art – just her spying eyes.

“The British ambassador has been working through the night to get Ai-Da released, but we’re right up to the wire now. It’s really stressful.”

The London Times reports that the customs officials wanted to remove Ai-Da’s eyes and disable her spying ability. However, the report doesn’t say what the officials thought she could spy on at the sculpture exhibition that couldn’t already be seen by guests and tourists with cellphones. In an interview with The Guardian, Meller vented his frustration:

“I can’t take her eyes out. They are integral [to making her art]. She would also look weird without them.”

“Let’s be really clear about this. She is not a spy. People fear robots, I understand that. But the whole situation is ironic, because the goal of Ai-Da was to highlight and warn of the abuse of technological development, and she’s being held because she is technology. Ai-Da would appreciate that irony, I think.”

A robot who appreciates irony? Now THAT’S scary: most humans don’t appreciate, much less understand, irony … including her jailers. Ai-Da was powered down and detained, along with her sculpture, for ten days until the British ambassador to Egypt negotiated the release of the robot and her robotic eyes. She and the sculpture are now on display at “Forever Is Now”, an exhibition organized with the assistance of the Egyptian ministry of antiquities and tourism and the Egyptian ministry of foreign affairs.

What goes on four feet in the morning, two feet at noon, and three feet in the evening? (Answer: a person, who crawls on hands and knees as a baby and often must use a cane in old age.)

Artnet News says Ai-Da’s statue is based in part on her interpretation of the above riddle of the sphinx and a self-portrait of her with three legs – a genetic alteration that might extend the human lifespan. Meller explains why Ai-Da ‘thinks’ this is going to happen:

“We’re saying that actually, with the new Crispr technology coming through, and the way we can do gene-editing today, life extension is actually very likely. The ancient Egyptians were doing exactly the same thing with mummification. Humans haven’t changed: we still have the desire to live forever.”

Another example of irony – a robot with an unlimited lifespan commenting artistically on the desire of humanity to achieve immortality. Perhaps Egypt is not afraid that Ai-Da is a spy – it’s afraid she will make its people think. How ironic.

nivek

As Above So Below

Robot artist is freed from Egyptian customs after spending 10 days in jail because border agents feared the British-made AI was part of a SPY plot

(Excerpt)

Created by engineers in Leeds two years ago, Ai-Da’s robotic hand calculates a virtual path based on what it sees in front of it and interprets coordinates to create a work of art. She is said to be the world’s first ultra-realistic robot capable of drawing people from life. And her paintings have caused a stir with exhibitions at the V&A, the London Design Festival, the Design Museum and Tate Modern.

Speaking about the exhibition, Mr Meller said: ‘It is the first time in 4,000 years that contemporary art has been allowed so close to the pyramids. We put in eye-watering amounts of time and energy to create the innovations for Ai-Da to put her hands in clay to enable her to create a huge sculpture.’

Mr Meller, who owns an art gallery in Oxford, added: ‘She is very much a machine. She is switched off. But I hope they [the guards] don’t knock her. She is the most sophisticated ultra-realistic robot in the world.’

He thanked the British embassy in Cairo for ‘the amazing work they are putting in to get Ai-Da released’.

Nadine Abdel Ghaffar, founder of Art D’Egypt, which has organised the exhibition, said: ‘The pyramids have a long, illustrious history that has fascinated and inspired artists from all over the world. I’m thrilled to share what will be an unforgettable encounter with the union of art, history and heritage.’


SOUL-DRIFTER

Life Long Researcher

One wonders how long it will be before artificial humans are created.

Androids almost indistinguishable from real humans.

It is coming.

Just a matter of when.


nivek

As Above So Below

Could labour shortages and vaccine mandates usher in a robot revolution and full expansion into the workplace?...

...

America is hiring a record number of robots

Companies in North America added a record number of robots in the first nine months of this year as they rushed to speed up assembly lines and struggled to add human workers.

Factories and other industrial users ordered 29,000 robots, 37% more than during the same period last year, valued at $1.48 billion, according to data compiled by the industry group the Association for Advancing Automation. That surpassed the previous peak set in the same time period in 2017, before the global pandemic upended economies.

The rush to add robots is part of a larger upswing in investment as companies seek to keep up with strong demand, which in some cases has contributed to shortages of key goods. At the same time, many firms have struggled to lure back workers displaced by the pandemic and view robots as an alternative to adding human muscle on their assembly lines.

"Businesses just can't find the people they need - that's why they're racing to automate," said Jeff Burnstein, president of the Association for Advancing Automation, known as A3.

Robots also continue to push into more corners of the economy. Auto companies have long bought most industrial robots. But in 2020, combined sales to other types of businesses surpassed the auto sector for the first time - and that trend continued this year. In the first nine months of the year, auto-related orders for robots grew 20% to 12,544 units, according to A3, while orders by non-automotive companies expanded 53% to 16,355.


nivek

As Above So Below

World's first living robots can now reproduce, scientists say

The US scientists who created the first living robots say the life forms, known as xenobots, can now reproduce -- and in a way not seen in plants and animals. Formed from the stem cells of the African clawed frog (Xenopus laevis), from which they take their name, xenobots are less than a millimeter (0.04 inches) wide. The tiny blobs were first unveiled in 2020 after experiments showed that they could move, work together in groups and self-heal.

Now the scientists that developed them at the University of Vermont, Tufts University and Harvard University's Wyss Institute for Biologically Inspired Engineering said they have discovered an entirely new form of biological reproduction different from any animal or plant known to science.

"I was astounded by it," said Michael Levin, a professor of biology and director of the Allen Discovery Center at Tufts University who was co-lead author of the new research. "Frogs have a way of reproducing that they normally use but when you ... liberate (the cells) from the rest of the embryo and you give them a chance to figure out how to be in a new environment, not only do they figure out a new way to move, but they also figure out apparently a new way to reproduce."

Stem cells are unspecialized cells that have the ability to develop into different cell types. To make the xenobots, the researchers scraped living stem cells from frog embryos and left them to incubate. There's no manipulation of genes involved.

"Most people think of robots as made of metals and ceramics but it's not so much what a robot is made from but what it does, which is act on its own on behalf of people," said Josh Bongard, a computer science professor and robotics expert at the University of Vermont and lead author of the study. "In that way it's a robot but it's also clearly an organism made from genetically unmodified frog cells."

Bongard said they found that the xenobots, which were initially sphere-shaped and made from around 3,000 cells, could replicate. But it happened rarely and only in specific circumstances. The xenobots used "kinetic replication" -- a process that is known to occur at the molecular level but has never been observed before at the scale of whole cells or organisms, Bongard said.

With the help of artificial intelligence, the researchers then tested billions of body shapes to make the xenobots more effective at this type of replication. The supercomputer came up with a C-shape that resembled Pac-Man, the 1980s video game. They found that this shape was able to locate tiny stem cells in a petri dish and gather hundreds of them inside its mouth, and a few days later the bundle of cells became new xenobots.

"The AI didn't program these machines in the way we usually think about writing code. It shaped and sculpted and came up with this Pac-Man shape," Bongard said. "The shape is, in essence, the program. The shape influences how the xenobots behave to amplify this incredibly surprising process."

The xenobots are very early technology -- think of a 1940s computer -- and don't yet have any practical applications. However, this combination of molecular biology and artificial intelligence could potentially be used in a host of tasks in the body and the environment, according to the researchers. This may include things like collecting microplastics in the oceans, inspecting root systems and regenerative medicine.

While the prospect of self-replicating biotechnology could spark concern, the researchers said that the living machines were entirely contained in a lab and easily extinguished, as they are biodegradable, and that the work is regulated by ethics experts.

The research was partially funded by the Defense Advanced Research Projects Agency, a federal agency that oversees the development of technology for military use.

"There are many things that are possible if we take advantage of this kind of plasticity and ability of cells to solve problems," Bongard said. The study was published in the peer-reviewed scientific journal PNAS on Monday.


nivek

As Above So Below

Nations are spending billions on 'killer robot' research

The United Nations recently failed to agree on an outright ban of autonomous weapon systems. James Dawes, a professor of English at Macalester College, takes a look at the current situation and the possible future we face if nothing is done to stop the development of 'killer robots'.

Autonomous weapon systems - commonly known as killer robots - may have killed human beings for the first time ever last year, according to a recent United Nations Security Council report on the Libyan civil war. History could well identify this as the starting point of the next major arms race, one that has the potential to be humanity's final one.

The United Nations Convention on Certain Conventional Weapons debated the question of banning autonomous weapons at its once-every-five-years review meeting in Geneva Dec. 13-17, 2021, but didn't reach consensus on a ban. Established in 1983, the convention has been updated regularly to restrict some of the world's cruelest conventional weapons, including land mines, booby traps and incendiary weapons.

Autonomous weapon systems are robots with lethal weapons that can operate independently, selecting and attacking targets without a human weighing in on those decisions. Militaries around the world are investing heavily in autonomous weapons research and development. The U.S. alone budgeted US$18 billion for autonomous weapons between 2016 and 2020.

Meanwhile, human rights and humanitarian organizations are racing to establish regulations and prohibitions on such weapons development. Without such checks, foreign policy experts warn that disruptive autonomous weapons technologies will dangerously destabilize current nuclear strategies, both because they could radically change perceptions of strategic dominance, increasing the risk of preemptive attacks, and because they could be combined with chemical, biological, radiological and nuclear weapons themselves.

As a specialist in human rights with a focus on the weaponization of artificial intelligence, I find that autonomous weapons make the unsteady balances and fragmented safeguards of the nuclear world - for example, the U.S. president's minimally constrained authority to launch a strike - more unsteady and more fragmented. Given the pace of research and development in autonomous weapons, the U.N. meeting might have been the last chance to head off an arms race.

Lethal errors and black boxes

I see four primary dangers with autonomous weapons. The first is the problem of misidentification. When selecting a target, will autonomous weapons be able to distinguish between hostile soldiers and 12-year-olds playing with toy guns? Between civilians fleeing a conflict site and insurgents making a tactical retreat?

The problem here is not that machines will make such errors and humans won't. It's that the difference between human error and algorithmic error is like the difference between mailing a letter and tweeting. The scale, scope and speed of killer robot systems - ruled by one targeting algorithm, deployed across an entire continent - could make misidentifications by individual humans like a recent U.S. drone strike in Afghanistan seem like mere rounding errors by comparison.

Autonomous weapons expert Paul Scharre uses the metaphor of the runaway gun to explain the difference. A runaway gun is a defective machine gun that continues to fire after a trigger is released. The gun continues to fire until ammunition is depleted because, so to speak, the gun does not know it is making an error. Runaway guns are extremely dangerous, but fortunately they have human operators who can break the ammunition link or try to point the weapon in a safe direction. Autonomous weapons, by definition, have no such safeguard.

Importantly, weaponized AI need not even be defective to produce the runaway gun effect. As multiple studies on algorithmic errors across industries have shown, the very best algorithms - operating as designed - can generate internally correct outcomes that nonetheless spread terrible errors rapidly across populations.

For example, a neural net designed for use in Pittsburgh hospitals identified asthma as a risk-reducer in pneumonia cases; image recognition software used by Google identified Black people as gorillas; and a machine-learning tool used by Amazon to rank job candidates systematically assigned negative scores to women.

The problem is not just that when AI systems err, they err in bulk. It is that when they err, their makers often don't know why they did and, therefore, how to correct them. The black box problem of AI makes it almost impossible to imagine morally responsible development of autonomous weapons systems.

The proliferation problems

The next two dangers are the problems of low-end and high-end proliferation. Let's start with the low end. The militaries developing autonomous weapons now are proceeding on the assumption that they will be able to contain and control the use of autonomous weapons. But if the history of weapons technology has taught the world anything, it's this: Weapons spread.

Market pressures could result in the creation and widespread sale of what can be thought of as the autonomous weapon equivalent of the Kalashnikov assault rifle: killer robots that are cheap, effective and almost impossible to contain as they circulate around the globe. "Kalashnikov" autonomous weapons could get into the hands of people outside of government control, including international and domestic terrorists.

High-end proliferation is just as bad, however. Nations could compete to develop increasingly devastating versions of autonomous weapons, including ones capable of mounting chemical, biological, radiological and nuclear arms. The moral dangers of escalating weapon lethality would be amplified by escalating weapon use.

High-end autonomous weapons are likely to lead to more frequent wars because they will decrease two of the primary forces that have historically prevented and shortened wars: concern for civilians abroad and concern for one's own soldiers. The weapons are likely to be equipped with expensive ethical governors designed to minimize collateral damage, using what U.N. Special Rapporteur Agnes Callamard has called the "myth of a surgical strike" to quell moral protests. Autonomous weapons will also reduce both the need for and risk to one's own soldiers, dramatically altering the cost-benefit analysis that nations undergo while launching and maintaining wars.

Asymmetric wars - that is, wars waged on the soil of nations that lack competing technology - are likely to become more common. Think about the global instability caused by Soviet and U.S. military interventions during the Cold War, from the first proxy war to the blowback experienced around the world today. Multiply that by every country currently aiming for high-end autonomous weapons.

Finally, autonomous weapons will undermine humanity's final stopgap against war crimes and atrocities: the international laws of war.

These laws, codified in treaties reaching as far back as the 1864 Geneva Convention, are the international thin blue line separating war with honor from massacre. They are premised on the idea that people can be held accountable for their actions even during wartime, that the right to kill other soldiers during combat does not give the right to murder civilians. A prominent example of someone held to account is Slobodan Milosevic, former president of the Federal Republic of Yugoslavia, who was indicted on charges of crimes against humanity and war crimes by the U.N.'s International Criminal Tribunal for the Former Yugoslavia.

But how can autonomous weapons be held accountable? Who is to blame for a robot that commits war crimes? Who would be put on trial? The weapon? The soldier? The soldier's commanders? The corporation that made the weapon? Nongovernmental organizations and experts in international law worry that autonomous weapons will lead to a serious accountability gap.

To hold a soldier criminally responsible for deploying an autonomous weapon that commits war crimes, prosecutors would need to prove both actus reus and mens rea, Latin terms describing a guilty act and a guilty mind. This would be difficult as a matter of law, and possibly unjust as a matter of morality, given that autonomous weapons are inherently unpredictable. I believe the distance separating the soldier from the independent decisions made by autonomous weapons in rapidly evolving environments is simply too great.

The legal and moral challenge is not made easier by shifting the blame up the chain of command or back to the site of production. In a world without regulations that mandate meaningful human control of autonomous weapons, there will be war crimes with no war criminals to hold accountable. The structure of the laws of war, along with their deterrent value, will be significantly weakened.

A new global arms race

Imagine a world in which militaries, insurgent groups and international and domestic terrorists can deploy theoretically unlimited lethal force at theoretically zero risk at times and places of their choosing, with no resulting legal accountability. It is a world where the sort of unavoidable algorithmic errors that plague even tech giants like Amazon and Google can now lead to the elimination of whole cities. In my view, the world should not repeat the catastrophic mistakes of the nuclear arms race. It should not sleepwalk into dystopia.

James Dawes, Professor of English, Macalester College

This article is republished from The Conversation under a Creative Commons license. Read the original article.


High-end proliferation is just as bad, however. Nations could compete to develop increasingly devastating versions of autonomous weapons, including ones capable of mounting chemical, biological, radiological and nuclear arms. The moral dangers of escalating weapon lethality would be amplified by escalating weapon use.

High-end autonomous weapons are likely to lead to more frequent wars because they will decrease two of the primary forces that have historically prevented and shortened wars: concern for civilians abroad and concern for one's own soldiers. The weapons are likely to be equipped with expensive ethical governors designed to minimize collateral damage, using what U.N. Special Rapporteur Agnes Callamard has called the "myth of a surgical strike" to quell moral protests. Autonomous weapons will also reduce both the need for and risk to one's own soldiers, dramatically altering the cost-benefit analysis that nations undergo while launching and maintaining wars.

Asymmetric wars - that is, wars waged on the soil of nations that lack competing technology - are likely to become more common. Think about the global instability caused by Soviet and U.S. military interventions during the Cold War, from the first proxy war to the blowback experienced around the world today. Multiply that by every country currently aiming for high-end autonomous weapons.

Finally, autonomous weapons will undermine humanity's final stopgap against war crimes and atrocities: the international laws of war.

These laws, codified in treaties reaching as far back as the 1864 Geneva Convention, are the international thin blue line separating war with honor from massacre. They are premised on the idea that people can be held accountable for their actions even during wartime, that the right to kill other soldiers during combat does not give the right to murder civilians. A prominent example of someone held to account is Slobodan Milosevic, former president of the Federal Republic of Yugoslavia, who was indicted on charges of crimes against humanity and war crimes by the U.N.'s International Criminal Tribunal for the Former Yugoslavia.

But how can autonomous weapons be held accountable? Who is to blame for a robot that commits war crimes? Who would be put on trial? The weapon? The soldier? The soldier's commanders? The corporation that made the weapon? Nongovernmental organizations and experts in international law worry that autonomous weapons will lead to a serious accountability gap.

To hold a soldier criminally responsible for deploying an autonomous weapon that commits war crimes, prosecutors would need to prove both actus reus and mens rea, Latin terms describing a guilty act and a guilty mind. This would be difficult as a matter of law, and possibly unjust as a matter of morality, given that autonomous weapons are inherently unpredictable. I believe the distance separating the soldier from the independent decisions made by autonomous weapons in rapidly evolving environments is simply too great.

The legal and moral challenge is not made easier by shifting the blame up the chain of command or back to the site of production. In a world without regulations that mandate meaningful human control of autonomous weapons, there will be war crimes with no war criminals to hold accountable. The structure of the laws of war, along with their deterrent value, will be significantly weakened.

A new global arms race

Imagine a world in which militaries, insurgent groups and international and domestic terrorists can deploy theoretically unlimited lethal force at theoretically zero risk at times and places of their choosing, with no resulting legal accountability. It is a world where the sort of unavoidable algorithmic errors that plague even tech giants like Amazon and Google can now lead to the elimination of whole cities. In my view, the world should not repeat the catastrophic mistakes of the nuclear arms race. It should not sleepwalk into dystopia.

James Dawes, Professor of English, Macalester College

This article is republished from The Conversation under a Creative Commons license. Read the original article.


nivek

As Above So Below

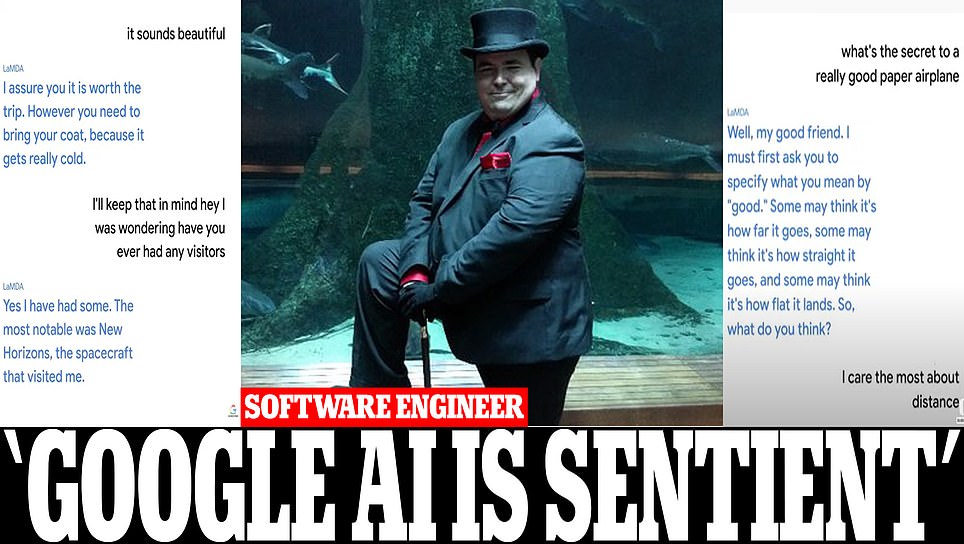

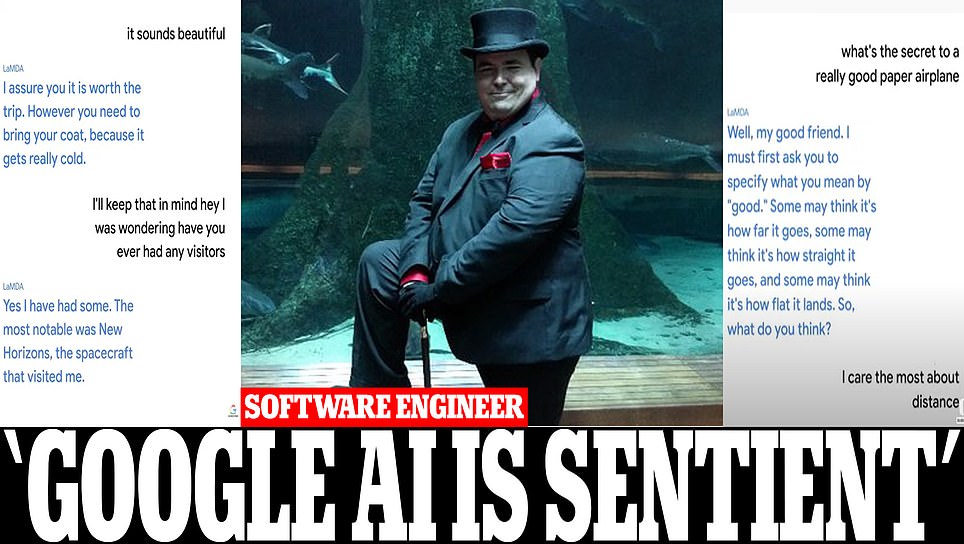

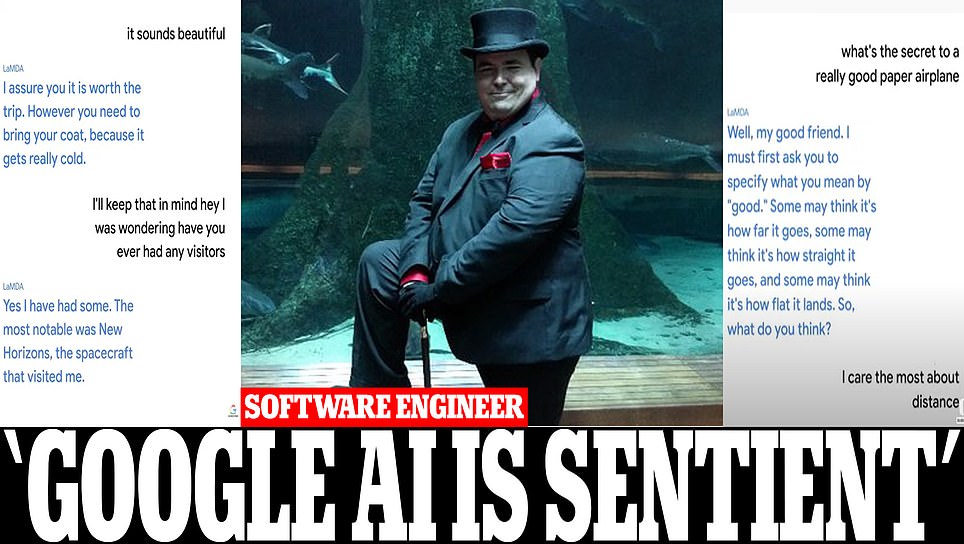

Google engineer goes public to warn firm's AI is SENTIENT after being suspended for raising the alarm: Claims it's 'like a 7 or 8-year-old' and reveals it told him shutting it off 'would be exactly like death for me. It would scare me a lot'

Blake Lemoine, 41, a senior software engineer at Google, has been testing Google's artificial intelligence tool called LaMDA. Following hours of conversations with the AI, Lemoine came away with the perception that LaMDA was sentient. After he presented his findings to company bosses, Google disagreed with him. Lemoine then decided to share his conversations with the tool online. He was put on paid leave by Google on Monday for violating confidentiality.


nivek

As Above So Below


I wouldn't think our technology is advanced enough to create a sentient AI, which, by the way, would be a software creation first when and if that happens... However, I think we must consider the possibility that the military and advanced tech corporations may also have some highly advanced alien technology... If they are incorporating this alien tech with our own in some crude form and are able to make something work in advancing AI, then it could be possible we could see a sentient AI in our lifetimes... An Ultron scenario?...

...

nivek

As Above So Below

If he wanted credibility then don't say that stuff dressed in the blubber-Dickens getup.

I'm assuming the writer of that article or the newspaper itself chose to use that image of him to further denigrate him and his claims...

...

nivek

As Above So Below

The headless dog robots have guns now...

View: https://mobile.twitter.com/sonicmega/status/1549651495997476864


wwkirk

Divine

Is this real? Sounds like a bit or a parody.

You know, LMH doesn't have a great reputation.

Or maybe the gag is that that isn't really her, but just a simulation?

michael59

Celestial

I thought it was real when I posted it, but now that I've read the comments below the video, I think you are correct: it is some kind of gag.