Would an ETV be able to visit Earth secretly?

CasualBystander

Celestial

We can reduce the radar signature of a plane down to that of a small bird.

Just painting planes the right colors makes them hard to spot.

These are supposed to be sophisticated aliens that understand physics enough to build lightspeed drives...

Are you implying they can't make their craft look sky blue?

Really?

You need to find better high tech aliens.

nivek

As Above So Below

Cloaking technology only decades more advanced than ours would keep their ships invisible to our radar or any other detection equipment. But I would hazard a guess that if aliens are traveling to us in a ship, their technology would be far more than decades ahead, perhaps hundreds of years more advanced...

Filakius

Adept

CasualBystander said: "These are supposed to be sophisticated aliens that understand physics enough to build lightspeed drives... Are you implying they can't make their craft look sky blue?"

I'm trying to keep the question broad and fair without anything implied.

My personal answer would be yes. I think an ET craft could enter Earth's atmosphere and fly around without being detected.

Filakius

Adept

I'd like to discuss cameras, telescopic lenses, radar, satellites and all the other ways we should be able to detect UFOs, but maybe it's best (and more fun) to start with space and work back to the boring stuff.

The fact that we can see asteroids in space does make it seem like we can detect everything all the time, as if the government and scientists can see everything in space and on Earth.

I also used to assume this until I looked into how it all works.

Observers find and track near-Earth objects (NEOs) using NASA's space-based NEOWISE infrared telescope and ground-based telescopes around the world. The ground-based telescopes have several limits:

1) They can only be used at night and in clear weather, not at sunset or sunrise, and they are subject to damage, faults and repairs. (This reduces observing time dramatically.) The particular scope that captured the images below only recently came back online after several months, because its instruments were damaged by Hurricane Maria.

Because of these limitations, and based on statistical population estimates, about 74 percent of NEOs still remain to be discovered. So there is no 24-hour, 365-day monitoring of 100% of the sky. It is more like 25% of 40% coverage in that hemisphere, in perfect conditions, zoomed in.

2) These observatories are not dedicated to scanning for asteroids. For example this one is operated by SRI International, USRA and UMET, under cooperative agreement with the National Science Foundation (NSF). Arecibo started with the dual purpose of understanding the ionosphere's F-layer while also producing a general-purpose scientific radio observatory. Scientists who want to use the observatory submit proposals that are evaluated by an independent scientific board.

Because observing time at a shared observatory is so limited (there is a huge list of scientists, even military, waiting to use it), only single photos are taken, then compared with other single images collected over weeks and months. We cannot see a distant asteroid moving in real time because space is very large and these objects move slowly in comparison. Two hours of observation in one night will not show much movement. The changes are subtle over time.

It's done by looking for slow changes. (Objects can fly around during that gap.) Even though they are called "wide-field survey observatories", they are very powerful telescopes that point towards different positions in the sky and track those positions for an extended time as Earth rotates. (They are not monitoring the entire sky.)

NASA's Planetary Defense Coordination Office is responsible for finding, tracking and characterising specifically "potentially hazardous" asteroids and comets coming near Earth. Not all of them. "Near Earth" refers to asteroids or comets whose orbits bring them within approximately 121 million miles of the Sun, and the focus is on objects larger than about 460 feet. The 460-foot cutoff point was established by a NASA NEO survey science definition team (SDT) in 2003. Anything smaller is not deemed worthy.

3) These long-range scopes (optical, radar, radio, infrared) are used to detect very large objects at a long distance. (Smaller craft, drones, debris or satellites are not detected.) For an ET race to traverse space within a realistic time frame, we assume it must travel faster than any asteroid (light speed or higher). Smaller and faster objects are too hard for long-distance scopes to detect. While zoomed in on distant objects, a scope cannot monitor the skies with 100% coverage constantly with a LIVE video feed, as we tend to assume.

4) Radar is then used to get the clearest images. This is not perfect. For example, the black area of the asteroid is most likely a depression that did not reflect the radar beam back to Earth. These single images are the best we could get of such a large object (3.6 mi) 6.4 million miles away, taken from the 15th to the 19th of December 2017.

A spaceship a tiny fraction of the size of the 6-kilometre giant would not even show up on these images. In fact, there could be smaller objects in these images right now, but we cannot tell. (Approx. 250 ft per pixel.)
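To put the "250 ft per pixel" figure in perspective, here is a rough sketch of how many pixels an object covers in such a radar image. The 100 ft craft size is purely a made-up number for illustration; only the 3.6 mi asteroid size and the 250 ft pixel scale come from the post above.

```python
FEET_PER_MILE = 5280


def pixels_spanned(object_size_ft: float, ft_per_pixel: float = 250.0) -> float:
    """Number of image pixels an object covers along one axis."""
    return object_size_ft / ft_per_pixel


asteroid_ft = 3.6 * FEET_PER_MILE  # the 3.6 mi asteroid quoted above
craft_ft = 100.0                   # hypothetical craft size, illustration only

print(f"asteroid: about {pixels_spanned(asteroid_ft):.0f} pixels across")
print(f"craft:    about {pixels_spanned(craft_ft):.2f} pixels across")
```

Anything that covers less than one pixel cannot be resolved as a shape. At best it nudges the brightness of a single pixel.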

5) Astronomers don't stare at the sky, or stare through a telescope for hours scanning the sky.

When astronomers are using a telescope, it is zoomed in on a fixed location that covers a tiny percentage of a distant part of outer space. They look, observe the distant star formations, and record the data.

Here's an example from NASA's small body database: 27 observations over 4 days, solution date Jan 2nd, 2018. This shows that no LIVE video feed is being taken. Rather, the scopes are connected to computers, and single images are taken and then compared over days (by computers) to see a change. Anything flying in between shots (or even in one shot, but not the rest) would not be recorded over the days, and would most likely be seen as an anomaly or camera glitch.
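A toy sketch of the "compare single images over several nights" idea. This is not the actual software of any survey, just an assumed simplification: each night yields a list of (x, y) detections, and something only counts as an object if it shows up on at least three nights along a straight, constant-speed path. The function name, tolerance and coordinates are all invented for illustration.

```python
from itertools import product


def link_tracks(frames, tol=1.0, min_hits=3):
    """frames: list of (time, [(x, y), ...]) sorted by time.

    Seeds a candidate path from every pair of detections in the first two
    frames, predicts where it should be in later frames, and keeps the
    candidate only if enough later detections land near the prediction.
    """
    tracks = []
    (t0, first), (t1, second) = frames[0], frames[1]
    for (x0, y0), (x1, y1) in product(first, second):
        vx = (x1 - x0) / (t1 - t0)
        vy = (y1 - y0) / (t1 - t0)
        track = [(t0, x0, y0), (t1, x1, y1)]
        for t, detections in frames[2:]:
            px, py = x0 + vx * (t - t0), y0 + vy * (t - t0)
            for x, y in detections:
                if abs(x - px) <= tol and abs(y - py) <= tol:
                    track.append((t, x, y))
                    break
        if len(track) >= min_hits:
            tracks.append(track)
    return tracks


# Four nights: one slow mover, plus a one-off blip at (50, 50) on night 0.
frames = [
    (0, [(10, 10), (50, 50)]),
    (1, [(12, 11)]),
    (2, [(14, 12)]),
    (3, [(16, 13)]),
]
tracks = link_tracks(frames)
print(len(tracks), "confirmed track(s)")
```

The slow mover is confirmed because it lines up across all four nights. The single-night blip never lines up with anything, so it is silently dropped, which is the "anomaly or camera glitch" outcome described above.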

From memory, bigger objects have entered and burnt up (hence NASA's size limit), but I'll have to find sources first. This article says it was the size of a lorry: Asteroid set to make a 'close' approach to Earth in hours | Daily Mail Online

This was spotted by an optical 1.52 m Cassegrain reflector telescope, and most likely missed by the larger radio telescopes used to initially spot slight differences in "star" movements much, much further away.

The UFO phenomenon is more localised, generally reported at 7000 ft and under. That would be like moving a pen or even a hand past a pair of binoculars: you see nothing but a blur (if that), as if the hand did not pass by the binoculars at all. Telescopes also do not see 100% of the sky. It's like a pinhole in a piece of paper, but focused way out into space. This is why someone standing outside the observatory would see a UFO, but the astronomer didn't (but could see Venus, therefore it was Venus).

Astronomers do not really look through telescopes as much as everybody thinks. The very few remote stations are accessed via SSH or VNC on a computer wherever you live.

Competition for telescope time is fierce and strictly prioritised. Even if you as an astronomer managed to snag 2 hours next week, you would be focused on your specific task of studying a distant solar system's planet wobbling past its sun, or whatever your project is.

People have a romanticised view of astronomers, sitting with a group of nice people sipping coffee and staring through scopes, but the scopes are mostly run and monitored by computers. Most professional astronomers rely on database queries instead.

MIT Lincoln Laboratory: LINEAR

LINEAR is not currently being used for NEOs, but is being tested. They have 2 devices for optical deep space viewing. This would only cover 40% of the Northern Hemisphere, only at night, with no cloud cover. It is also subject to damage, repairs and updates, which drastically reduce the supposed 100%, 24/7 coverage.

The device uses one CCD (charge-coupled device), a type of sensor found in modern cameras (my SONY had 3 CCDs). It's an optical scope, used only at night, that sends data back to a computer to "generate" observations. The same process is used: single images are taken and compared over many nights of data to make an "observation". Nothing is detected LIVE as we would assume.

Pan-STARRS is cool, but also uses the same slow technique of "detecting differences from previous observations of the same areas of the sky" over days and weeks to make an observation.

Basically, something could fly past all these devices and never be seen. They're just not as LIVE as we think they are.

Pan-STARRS - Wikipedia

Amateur astronomers use powerful optical telescopes to view light. These can only be used at night, without cloud cover. Light pollution from cities and a full moon make visibility poor.

In order to see a satellite beyond the moon (for example), the sun's light would have to reflect off the metal and back to the telescope. The small amount of light (when at the right angle) would not be enough to see beyond a certain distance.

Consider trying to track an (alleged) fast ETV through an optical scope designed for stationary objects (set tripod, motorised tracking, equatorial pans), with objects at various distances (car, house, etc.).

Saturn through a 4" refractor telescope. Only good for long-distance objects, which means a very small field of view. A rocket or spaceship could fly past and, unless it's in focus, will just be a brief blur.

S.E.T.I.

From my understanding (not a SETI fan), they listen for radio frequencies under the impression that ETs would be sending signals this way. They do not scan all of space, but a very small area: some distant zone considered most likely to be habitable. A very, very, very small area, from memory. This is what turned me off them, actually. A complete waste of time and money, imo.

Radio waves travel at the speed of light, and this sounds pretty damn fast. But really, in the grand scheme of things, it isn't.

We have been broadcasting radio signals into space via TV stations and radio transmissions for about 80 years. That means our first transmission is 80 light years away, which seems like a long distance. It is, but it isn't...
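A quick back-of-envelope sketch of why 80 light years "is, but isn't" a long way. The galaxy diameter used here (about 100,000 light years) is a commonly quoted round figure and is my assumption, not something from the post.

```python
def radio_bubble_fraction(years_broadcasting: float,
                          galaxy_diameter_ly: float = 100_000) -> float:
    """Width of our radio 'bubble' as a fraction of the galaxy's diameter.

    Radio travels one light year per year, so the bubble radius in light
    years equals the number of years we have been broadcasting.
    """
    bubble_diameter_ly = 2 * years_broadcasting
    return bubble_diameter_ly / galaxy_diameter_ly


fraction = radio_bubble_fraction(80)
print(f"bubble spans about {fraction:.2%} of the galaxy's width")
```

So under that assumption our earliest signals have crossed well under one percent of the width of our own galaxy.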

FURTHER READING

Various telescope types: https://web.njit.edu/~cao/Phys320_L6.pdf

Filakius

Adept

Why can't we find missing planes like flight MH-370??

There are only around 10 US, Russian and European satellites capable of taking images detailed enough to show a plane. They each orbit Earth roughly every hour and a half, imaging a strip a few hundred kilometres wide as they go. "Each one probably covers a few per cent of the Earth every day."

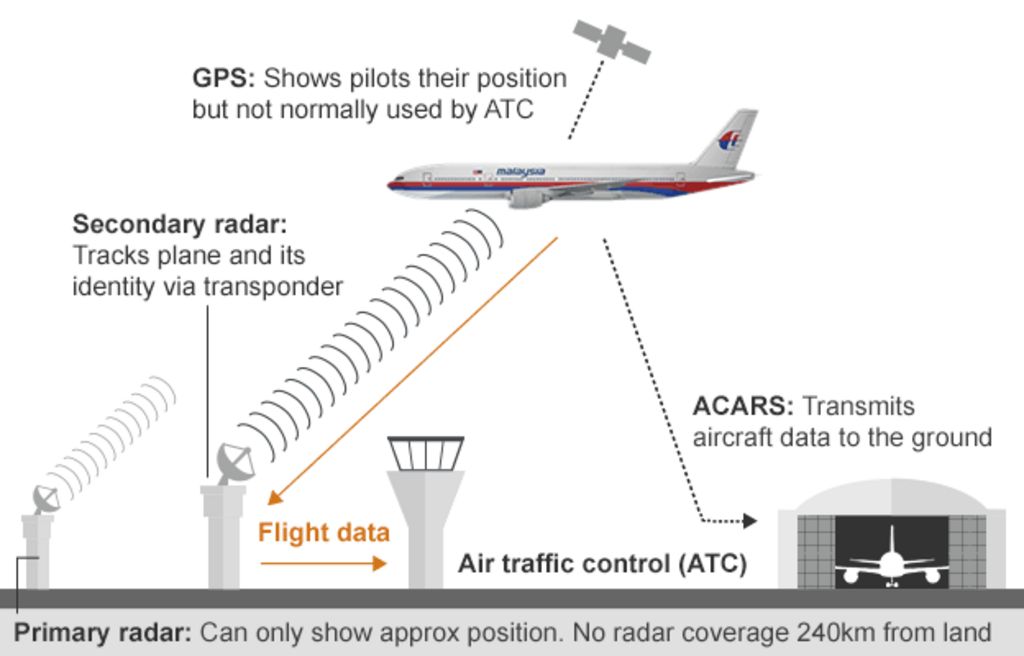

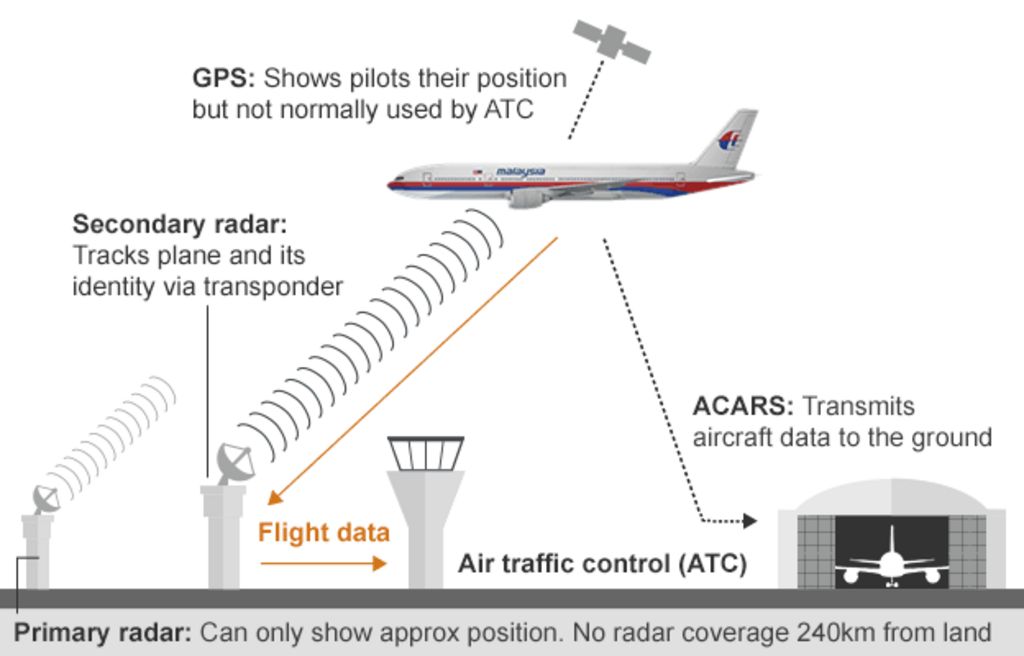

Planes are usually tracked by:

- ADS-B, which is less common: only 35% of planes in the US are equipped.

- MLAT. 99% of Europe is covered, but only parts of the US are. At least four receivers are needed to calculate the position of an aircraft.

- TAR (primary) radar, which relies on radio waves reflecting off metallic objects and is only effective within a short range of the radar head (up to about 160 nmiles).

- En route radar, or secondary surveillance radar, which relies on an aircraft having a transponder that transmits a data signal. The ground station interrogates the transponder and receives its reply, out to a radius of about 250 nmiles (463 km) and up to 100 000 ft (30 km). Not very much at all.

The ease with which Malaysia Airlines flight MH370 disappeared from radar systems illustrates how modern aviation relies on ageing ground infrastructure. The inability of 26 nations to find a 250-tonne Boeing 777 has shocked an increasingly connected world and exposed flaws in the use of radar, which fades over oceans and deserts.

We track our cars, we track our kids' cell phones, but we can't track airplanes when they are over oceans or other remote areas. Satellites provide the obvious answer, experts say. However, the overhaul of current systems would be costly.

All planes have a radar transponder that squawks their identity and location via terrestrial radio signals. But once they pass out of range of land-based radar, a few hundred kilometres from the shore, aircraft are often on their own until they’re picked up by the next receiver.
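A rough sketch of why land-based radar gives out a few hundred kilometres offshore: the Earth curves away underneath the beam. This uses the standard line-of-sight horizon formula with the usual 4/3 effective Earth radius for atmospheric refraction. The antenna height (30 m) and aircraft altitudes are my own assumed example values, not figures from the articles quoted here.

```python
import math

EARTH_RADIUS_M = 6_371_000
K_FACTOR = 4 / 3  # common approximation for radio refraction in the atmosphere


def radar_horizon_km(antenna_height_m: float, target_height_m: float,
                     k: float = K_FACTOR) -> float:
    """Maximum line-of-sight range between a radar antenna and a target."""
    r_eff = k * EARTH_RADIUS_M
    d_antenna = math.sqrt(2 * r_eff * antenna_height_m)
    d_target = math.sqrt(2 * r_eff * target_height_m)
    return (d_antenna + d_target) / 1000


# Assumed example values: 30 m antenna, airliner at 35,000 ft (10,668 m),
# and a low flyer at 300 m.
print(f"airliner:  {radar_horizon_km(30, 10_668):.0f} km")
print(f"low flyer: {radar_horizon_km(30, 300):.0f} km")
```

With these assumed heights the cruising airliner drops below the horizon at roughly the same distance as the 250 nmile figure in the list above, and anything flying low disappears far sooner.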

Planes are big, but the ocean is much bigger and searching can be difficult. It took two years to find the wreckage of an Air France airliner that went down in the mid-Atlantic in 2009. And it’s not just planes that disappear into the abyss. On 4 February 2013, a 1400-tonne ocean liner, the Lyubov Orlova, went missing and has never been found.

What about spy satellites?

The US National Reconnaissance Office (NRO) has an extensive network of spy satellites that cover the globe and could have taken an image of the downed plane. We don’t know the exact capabilities of these satellites, but they are likely to take sharper pictures than the civilian equivalent. They are also probably equipped with more advanced colour filters, and the NRO is likely to have advanced image analysis software, both of which could help pick out wreckage from the sea.

So could the NRO have a picture of the plane?

It is not the NRO’s job to track commercial airliners. The satellites’ orbits are public knowledge and some have passed close to the plane’s last known location, but they may not have been imaging at the time. If the agency does have a picture, it would probably quietly pass that information to the relevant authorities, says McDowell.

Many satellites don’t take pictures in visible light, but use other wavelengths instead. The NRO’s infrared satellites look for the heat signatures of missile launches around the world, and could potentially see a plane explode in mid-air. According to The New York Times, the US Department of Defense has checked the surveillance data and found no evidence of an explosion.

"Using a system that looks for flashes around the world, the Pentagon reviewed preliminary surveillance data from the area where the plane disappeared and saw no evidence of an explosion,"

I had a look through pretty much all the current missile defence programmes allied forces have, all of which rely on ground-based radar (PAC-3, Aegis, THAAD etc.) to detect missiles. But intercontinental ballistic missiles, such as the one tested by North Korea, fly far too high and fast for these systems to engage. This is where GMD comes onto the scene. It mostly relies on various radars, but I'll ignore them to discuss the 3 satellite programmes being used.

Ground-Based Midcourse Defense (GMD)

The Ground-Based Midcourse Defense (GMD) program uses land-based missiles to intercept incoming ballistic missiles in the middle of their flight, outside the atmosphere. The well-known Patriot missiles provide what’s known as terminal-phase defense options, while longer-reach options like the land-based THAAD intercept missiles coming down. Even so, their sensors and flight ranges are best suited to defense against shorter range missiles launched from in-theater. GMD uses ground-based radar, backed by 3 different satellite programmes.

1) The Defense Support Program (DSP)

First launched in 1970, this is a constellation of satellites in geosynchronous orbit (GEO) that use infrared sensors to detect heat from missile and booster plumes against the Earth's background. The satellites include a spinning short- and mid-wave infrared sensor with a 10-second revisit rate, though they are not dynamically taskable. Each unit is estimated to cost around $400 million. Due to be superseded by the Space-based Infrared System (see 3 below).

2) Space Tracking and Surveillance System (STSS)

A space-based system developed and operated by the Missile Defense Agency that detects and tracks ballistic missiles. This system is an experimental component of the U.S. Ballistic Missile Defense System (BMDS) and STSS serves as a complement to other U.S. space-based platforms.

The aim of the Space Tracking and Surveillance System is to track missiles through all three phases of flight (boost, midcourse, and terminal); discriminate between warheads and decoys; transmit data to other systems that will be used to cue radars and provide intercept handovers; and provide data for missile defense interceptors to hit their target.

The STSS constellation orbits at 1,350 km with a 58-degree inclination and has a two-hour orbital period. The sensors on the two satellites detect visible and infrared light. The system has three main components: a wide-view acquisition sensor, a narrow-view tracking sensor, and a signal and data processor subsystem.
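The link between altitude and orbital period comes straight from Kepler's third law, so the "two-hour" figure can be sanity-checked. A minimal sketch, assuming a circular orbit and using standard values for Earth's mean radius and gravitational parameter (neither is from the post); the 400 km altitude is just an example low orbit I picked for comparison.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's standard gravitational parameter
EARTH_RADIUS_KM = 6_371.0       # mean radius


def orbital_period_minutes(altitude_km: float) -> float:
    """Period of a circular orbit at the given altitude (Kepler's third law)."""
    semi_major_axis_km = EARTH_RADIUS_KM + altitude_km
    period_s = 2 * math.pi * math.sqrt(semi_major_axis_km ** 3 / MU_EARTH_KM3_S2)
    return period_s / 60


print(f"1,350 km: {orbital_period_minutes(1_350):.0f} min")
print(f"  400 km: {orbital_period_minutes(400):.0f} min")
```

At 1,350 km this gives a little under two hours, in line with the figure quoted, while a lower orbit of a few hundred kilometres comes out at roughly an hour and a half, like the imaging satellites mentioned earlier in this post.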

The wide-view acquisition sensor will detect missiles during their boost phase. (No ground view)

Once the missile reaches its midcourse phase, STSS follows its trajectory through space with a narrow-view tracker. While both the wide- and narrow-view sensors home in on a missile, they send information to the system’s signal and data processor subsystem, which is capable of sifting through some 2.1 gigabits of data per second. This subsystem can detect and track over 100 objects at a time and will be able to determine which objects in space are missiles or warheads.

3) Space-based Infrared System (SBIRS)

This system is intended to replace the aging DSP system of satellites. SBIRS satellites are able to scan large swaths of territory to detect missile activity and can also home in on areas of interest for lower-scale activities, including launches of tactical ballistic missiles. These sensors are independently tasked, meaning the satellite can both scan a wide territory and fixate on a particular area of concern simultaneously.

The first satellite, SBIRS GEO-1, launched in May 2011 and was followed in March 2013 by the SBIRS GEO-2 satellite. July 2015, third satellite and fourth satellite first, on October 2016.

In addition to the dedicated satellites, the system also includes two missile warning sensors hosted on classified satellites in HEO that were launched in November 2006 (each includes a scanning short- and mid-wave infrared sensor that can see close to the ground) and a fourth satellite in June 2008.

The constellation has a continuous view of all of the earth’s surface, which it images every 10 seconds while searching for infrared (IR) activity indicating heat signatures. SBIRS is able to detect missile launches faster than any other system and can identify the missile’s type, burnout velocity, trajectory, and point of impact.

The system was initially designed to include satellites in low earth orbit. However, that part of the program was incorporated into the STSS program in 2001 and handed over to the Missile Defense Agency (MDA). SBIRS itself is intended to replace the Defense Support Program (see 1 above).

SBIRS GEO was deployed in 2011 and consists of both taskable and nontaskable sensors, with both scanning and staring payloads using short- and mid-wave infrared sensors. It has a hemispheric field of view and can also see very close to the ground.

SBIRS HEO, launched in 2006, was the first deployed asset.

It is re-taskable and includes a scanning short- and mid-wave infrared sensor that can see close to the ground.

As of 2015, the system’s current total program cost was almost $19B for six satellites.

As of 2015, two of the four planned satellites are in orbit, as well as three complementary scanning sensors on intelligence satellites in HEO.

SBIRS is positioned so that it would be the first U.S. asset to detect a ballistic missile launch. Once it detects significant activity, that information is transmitted to Air Force Space Command in Colorado and subsequently to North American Aerospace Defense Command (NORAD) and other relevant parts of the military who will decide whether the launch threatens the United States or its interests.

The science is in the growing set of algorithms used to manipulate data collected by the system. Work in the “battlespace awareness” mission for satellite IR data can take hours today.

The only image released for public use from the SBIRS system is this one.

It captures the heat plume emitted by a Delta IV predawn launch. The plume is readily visible against the backdrop of Earth, which in the early morning hours sees little heat and sunlight.

SBIRS OPS CENTER

The ops center is a modern, wide space dotted with computer consoles. Personnel in an intelligence cell are off to the side. A space in the center will be occupied by each shift’s director.

The gravity of the mission contrasts with the youth of its operators at Buckley, most of whom were born after the ending of the Cold War that drove development of the missile warning and defense architecture. The average SBIRS operator is about 20 years old and has about six months’ experience working the console. The ops floor is populated by a multinational presence, including British, Australian and Canadian military officials. These operators work 12-hr shifts, constantly monitoring computer screens. The SBIRS operators are divided into four areas aligned with its four missions: missile defense, missile warning, technical intelligence and battlespace awareness.

NON-MILITARY

Factoring in things like maintenance, cloud cover and the fact that they don’t take images all the time, that probably means at most 5 per cent of the planet is imaged in high resolution each day. Some areas, such as cities, are imaged much more than others. This can be seen in Google Maps, where some areas are low-res and years old. "Open ocean is not what you’re taking pictures of," says Jonathan McDowell of the Harvard-Smithsonian Center for Astrophysics in Cambridge, Massachusetts.
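A rough sketch of where a "few per cent per day" figure can come from, using the numbers quoted earlier in this post (an orbit roughly every hour and a half, a strip a few hundred kilometres wide). The 300 km strip width and the duty cycle are my own assumed values, and the estimate ignores overlap between strips near the poles.

```python
import math

EARTH_RADIUS_KM = 6_371.0


def daily_coverage_fraction(orbit_minutes: float = 90.0,
                            swath_km: float = 300.0,
                            duty_cycle: float = 1.0) -> float:
    """Fraction of Earth's surface one satellite images per day.

    duty_cycle is the share of each orbit actually spent imaging
    (an assumed parameter, 1.0 means the camera never stops).
    """
    orbits_per_day = 24 * 60 / orbit_minutes
    track_length_km = 2 * math.pi * EARTH_RADIUS_KM  # one lap of the planet
    imaged_km2 = orbits_per_day * track_length_km * swath_km * duty_cycle
    earth_area_km2 = 4 * math.pi * EARTH_RADIUS_KM ** 2
    return imaged_km2 / earth_area_km2


print(f"imaging non-stop:        {daily_coverage_fraction():.0%}")
print(f"imaging 10% of the time: {daily_coverage_fraction(duty_cycle=0.1):.1%}")
```

Even imaging non-stop, one satellite would cover only about a third of the planet per day under these assumptions. Once it only images a fraction of the time, and cloud and maintenance are taken out, a few per cent looks about right.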

Ball Aerospace is the maker of Maps- and Earth-related imagery satellites, and it has now started working on the Worldview-3 satellite for commercial satellite operator DigitalGlobe. Ball project manager Jeff Dierks, who has worked on most satellites operated by companies that sell images to Google, says the technology used by these gigantic flying cameras is rather basic. “At its simplest, it is a decent resolution digital camera in space,” he said, and explained that instead of taking individual pictures it takes continuous images “along thin strips of land or sea.”

Commercial images such as the ones Google Maps and Google Earth use, meanwhile, will only show objects that measure at least 50 cm (20 inches).

Weather satellites take pictures of the entire planet each day, but only once per hour at very low resolution.

All of these satellites are used for very specific purposes. Missile watch is taken very seriously and the technology is not used as a toy or for other purposes.

If you have ever looked through binoculars or a telescope and waved your hand in front of the lens, you'll know it passes by as an almost invisible blur.., and the same applies to all telescopes and lenses. This means if the camera has a wide field of view (the image of Australia).., then each pixel covers too much ground to show a car, plane, boat or a potential UFO. The more zoomed in.., the narrower the FOV etc.., but we'll get into that boring stuff later.
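To put rough numbers on the wide-versus-zoomed trade-off, here is a minimal Python sketch. The swath widths and pixel counts are made-up illustrative values, not the specs of any real satellite, and the function names are just chosen for this example.

```python
def ground_footprint_m(swath_km: float, pixels_across: int) -> float:
    """Width of ground covered by a single pixel, in metres."""
    return swath_km * 1000.0 / pixels_across


def can_resolve(object_size_m: float, footprint_m: float) -> bool:
    """Very rough rule of thumb: the object must span at least one pixel."""
    return object_size_m >= footprint_m


# Assumed, illustrative numbers only.
wide_view = ground_footprint_m(swath_km=4000.0, pixels_across=4000)   # continent-sized frame
zoomed_in = ground_footprint_m(swath_km=13.0, pixels_across=26000)    # narrow strip

for label, footprint in (("wide view", wide_view), ("zoomed in", zoomed_in)):
    print(f"{label}: {footprint:.1f} m per pixel, "
          f"70 m airliner spans a pixel: {can_resolve(70.0, footprint)}")
```

With these assumed values the wide frame gives about 1 km per pixel, so an airliner-sized object disappears inside a single pixel, while the narrow strip gets down to the 50cm class mentioned above.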

starsfall

Believer

Yes, I think they'd be able to infiltrate our skies with ease, probably wielding technology we could only dream of... if a malevolent race of ETs wanted to mess up our day, I'm pretty positive they'd be able to position an armada above our planet without anyone noticing. Even if something did pick it up and they were caught, they would probably know what kind of technology we have and what we use it for, and would be able to avoid it or bend it to their own uses.

Rick Hunter

Celestial

Military and commercial satellites create so much data every day that it would be a tremendous undertaking for a pair of human eyes to look at every image. I would guess that, at least as far as the military is concerned, they don't pay much attention to anything that is not of terrestrial defense significance, i.e. missiles and aircraft. They probably catch UFOs fairly often and tell lower ranking staff that they are just anomalies to be ignored, while storing the data somewhere that those in the know can make use of it.

humanoidlord

ce3 researcher

they are too fast to be detected with any passive system, and its hard even with active ones

also they arent extraterrestrial, the answer is far more out there

nivek

As Above So Below

Some are extraterrestrial, but many are not, especially the bad ones, from what I've surmised...

humanoidlord

ce3 researcher

nope all ufos have paranormal elements

they are clearly interdimensional

though i have heard most abduction experiences are just humans torturing people

nivek

As Above So Below

The extraterrestrial types do not fly their ships within our atmosphere...

CasualBystander

Celestial

Why can't we find missing planes like flight MH-370??

There are only around 10 US, Russian and European satellites capable of such detailed images. They each orbit Earth roughly every hour and a half, imaging a strip a few hundred kilometres wide as they go. “Each one probably covers a few per cent of the Earth every day.”
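The "few per cent a day" figure can be sanity-checked with some back-of-envelope arithmetic. This is a minimal sketch: the 300 km swath is one reading of "a few hundred kilometres", the 10% duty cycle (fraction of each orbit actually spent imaging) is purely an assumption, and overlap between neighbouring strips is ignored.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def daily_coverage_fraction(swath_km: float, orbit_minutes: float,
                            duty_cycle: float = 1.0) -> float:
    """Upper-bound fraction of Earth's surface imaged per day by one satellite.

    Treats each orbit as one strip of width swath_km wrapped once around
    the globe and ignores any overlap between strips.
    """
    orbits_per_day = 24 * 60 / orbit_minutes
    strip_length_km = 2 * math.pi * EARTH_RADIUS_KM
    imaged_km2 = swath_km * strip_length_km * orbits_per_day * duty_cycle
    earth_area_km2 = 4 * math.pi * EARTH_RADIUS_KM ** 2
    return min(1.0, imaged_km2 / earth_area_km2)


always_on = daily_coverage_fraction(swath_km=300.0, orbit_minutes=90.0)
part_time = daily_coverage_fraction(swath_km=300.0, orbit_minutes=90.0,
                                    duty_cycle=0.10)  # assumed duty cycle

print(f"imaging non-stop:   {always_on:.1%}")
print(f"imaging 10% of time: {part_time:.1%}")
```

Even imaging non-stop, one such satellite would cover well under half the planet per day under these assumptions; with a modest duty cycle the result lands in the "few per cent" range quoted.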

Planes are usually tracked by:

- ADS-B, which is less common, with only 35% of planes equipped in the US.

- MLAT. 99% of Europe is covered, but only parts of the US are. At least four receivers are needed to calculate the position of an aircraft.

- TAR (primary) radar, which relies on radio waves reflecting off metallic objects and is effective only within a short range of the radar head, about 160 nmiles.

- En route radar, or secondary surveillance radar, which relies on an aircraft having a transponder that transmits a data signal. A ground station interrogates the transponder and receives its reply, out to about 250 nmiles (463 km) in radius and up to 100 000 ft (30 km) in altitude. Not very much at all.

The ease with which Malaysia Airlines flight MH370 disappeared from radar systems illustrates how modern aviation relies on ageing ground infrastructure. The inability of 26 nations to find a 250-tonne Boeing 777 has shocked an increasingly connected world and exposed flaws in the use of radar, which fades over oceans and deserts.

We track our cars, we track our kids' cell phones, but we can't track airplanes when they are over oceans or other remote areas. Satellites provide the obvious answer, experts say. However, the overhaul of current systems would be costly.

All planes have a radar transponder that squawks their identity and location via terrestrial radio signals. But once they pass out of range of land-based radar, a few hundred kilometres from the shore, aircraft are often on their own until they’re picked up by the next receiver.

Planes are big, but the ocean is much bigger and searching can be difficult. It took two years to find the wreckage of an Air France airliner that went down in the mid-Atlantic in 2009. And it’s not just planes that disappear into the abyss. On 4 February 2013, a 1400-tonne ocean liner, the Lyubov Orlova, went missing and has never been found.

What about Spy satellites?

The US National Reconnaissance Office (NRO) has an extensive network of spy satellites that cover the globe and could have taken an image of the downed plane. We don’t know the exact capabilities of these satellites, but they are likely to take sharper pictures than the civilian equivalent. They are also probably equipped with more advanced colour filters, and the NRO is likely to have advanced image analysis software, both of which could help pick out wreckage from the sea.

So could the NRO have a picture of the plane?

It is not the NRO’s job to track commercial airliners. The satellites’ orbits are public knowledge and some have passed close to the plane’s last known location, but they may not have been imaging at the time. If the agency does have a picture, it would probably quietly pass that information to the relevant authorities, says McDowell.

Many satellites don’t take pictures in visible light, but use other wavelengths instead. The NRO’s infrared satellites look for the heat signatures of missile launches around the world, and could potentially see a plane exploding in mid-air. According to The New York Times, the US Department of Defense has checked the surveillance data and found no evidence of an explosion.

"Using a system that looks for flashes around the world, the Pentagon reviewed preliminary surveillance data from the area where the plane disappeared and saw no evidence of an explosion,"

I had a look through pretty much all the current missile defence programmes allied forces have (PAC-3, Aegis, THAAD etc), all of which rely on ground- or ship-based radar to detect missiles. But intercontinental ballistic missiles, such as the one tested by North Korea, fly far too high and fast for these systems to engage. This is where GMD comes onto the scene. It mostly relies on various radars.., but I'll ignore them to discuss the 3 satellite programmes being used..

Ground-Based Midcourse Defense (GMD)

The Ground-Based Midcourse Defense (GMD) program uses land-based missiles to intercept incoming ballistic missiles in the middle of their flight, outside the atmosphere. The well-known Patriot missiles provide what’s known as terminal-phase defense options, while longer-reach options like the land-based THAAD intercept missiles coming down. Even so, their sensors and flight ranges are best suited to defense against shorter range missiles launched from in-theater. GMD uses ground-based radar, backed by 3 different satellite programmes.

1) The Defense Support Program (DSP)

First launched in 1970, DSP is a constellation of satellites in geosynchronous orbit (GEO) that use infrared sensors to detect heat from missile and booster plumes against the Earth's background. The satellites include a spinning short- and mid-wave infrared sensor with a 10-second revisit rate, though they are not dynamically taskable. Each unit is estimated to cost around $400 million. Due to be superseded by the Space-based Infrared System (see 3 below).

2) Space Tracking and Surveillance System (STSS)

A space-based system developed and operated by the Missile Defense Agency that detects and tracks ballistic missiles. This system is an experimental component of the U.S. Ballistic Missile Defense System (BMDS) and STSS serves as a complement to other U.S. space-based platforms.

The aim of the Space Tracking and Surveillance System is to track missiles through all three phases of flight (boost, midcourse, and terminal); discriminate between warheads and decoys; transmit data to other systems that will be used to cue radars and provide intercept handovers; and provide data for missile defense interceptors to hit their target.

The STSS constellation orbits at 1,350km with a 58-degree inclination and has a two-hour orbital period. The sensor payload on each of the two satellites detects visible and infrared light and has three main components: a wide-view acquisition sensor, a narrow-view tracking sensor, and a signal and data processor subsystem.
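The altitude and period figures hang together, which can be checked with Kepler's third law for a circular orbit. This is only a sketch: it assumes a perfectly circular orbit and a spherical Earth of mean radius, and the 500 km altitude used for a typical imaging satellite is an assumed value, not one from the sources quoted here.

```python
import math

MU_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
EARTH_RADIUS_M = 6_371_000  # mean Earth radius, m


def orbital_period_minutes(altitude_km: float) -> float:
    """Period of a circular orbit at the given altitude: T = 2*pi*sqrt(a^3/mu)."""
    semi_major_axis_m = EARTH_RADIUS_M + altitude_km * 1000.0
    period_s = 2 * math.pi * math.sqrt(semi_major_axis_m ** 3 / MU_EARTH)
    return period_s / 60.0


print(f"STSS at 1350 km:      {orbital_period_minutes(1350):.0f} min")
print(f"assumed 500 km orbit: {orbital_period_minutes(500):.0f} min")
```

At 1,350 km the period comes out a little under two hours, and a satellite a few hundred kilometres up goes around in roughly an hour and a half, in line with the figures in the text.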

The wide-view acquisition sensor will detect missiles during their boost phase. (No ground view)

Once the missile reaches its midcourse phase, STSS follows its trajectory through space with a narrow-view tracker. While both the wide- and narrow-view sensors home in on a missile, they send information to the system’s signal and data processor subsystem, which is capable of sifting through some 2.1 gigabits of data per second. This subsystem can detect and track over 100 objects at a time and will be able to determine what objects in space are missiles or warheads.

The goal of an operational STSS is to track missiles through all three phases; discriminate between warheads and decoys; transmit data to other systems.

3) Space-based Infrared System (SBIRS)

This system is intended to replace the aging DSP system of satellites. SBIRS satellites are able to scan large swaths of territory to detect missile activity and can also home in on areas of interest for lower-scale activities, including launches of tactical ballistic missiles. These sensors are independently tasked, meaning the satellite can both scan a wide territory and fixate on a particular area of concern simultaneously.

The first satellite, SBIRS GEO-1, launched in May 2011 and was followed in March 2013 by the SBIRS GEO-2 satellite. July 2015, third satellite and fourth satellite first, on October 2016.

In addition to the dedicated satellites, the system also includes two missile warning sensors hosted on classified satellites in HEO that were launched in November 2006 (includes a scanning sensor composed of short- and mid-wave infrared sensors that can see close to the ground) and a fourth satellite in June 2008.

The constellation has a continuous view of all of the earth’s surface, which it images every 10 seconds while searching for infrared (IR) activity indicating heat signatures. SBIRS is able to detect missile launches faster than any other system and can identify the missile’s type, burnout velocity, trajectory, and point of impact.

The system was initially designed to include satellites in low earth orbit. However, that part of the program was incorporated into the STSS program in 2001 and handed over to the Missile Defense Agency (MDA). SBIRS itself is intended to replace the Defense Support Program (see 1 above).

SBIRS GEO was deployed in 2011 and consists of both taskable and nontaskable sensors with both scanning and staring payloads working in short- and mid-wave infrared. It is able to both have a hemispheric field of view as well as see very close to the ground.

SBIRS HEO, launched in 2006, was the first deployed asset.

It is re-taskable and includes a scanning sensor working in short- and mid-wave infrared that can see close to the ground.

As of 2015, the system’s current total program cost was almost $19B for six satellites.

As of 2015, two of the four planned satellites are in orbit, as well as three complementary scanning sensors on intelligence satellites in HEO.

SBIRS is positioned so that it would be the first U.S. asset to detect a ballistic missile launch. Once it detects significant activity, that information is transmitted to Air Force Space Command in Colorado and subsequently to North American Aerospace Defense Command (NORAD) and other relevant parts of the military who will decide whether the launch threatens the United States or its interests.

The science is in the growing set of algorithms used to manipulate data collected by the system. Work in the “battlespace awareness” mission for satellite IR data can take hours today.

The only image released for public use from the Sbirs system is this one.

Let's discuss NEOWISE.

First you have to understand black body radiation.

The Sun is about 5800 K. The earth emits at about 255K (slightly warmer than illustrated); the surface is actually 289K on average. At earth orbit an average object (like the moon) is 255K.

What happens with an instrument trying to detect 3.4 - 22 μm (the range of the WISE four-band instrument package) at room temperature? The detector saturates each and every image by self-emission.

Light telescopes on earth work fine. Infrared is another story. Trying to image 22 μm on a 289K planet is like trying to image light with a detector operating at 3000K (i.e. the detector is a light bulb and self-saturates).
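Wien's displacement law makes the self-emission problem concrete: a black body at temperature T glows brightest at a wavelength of roughly 2898 μm·K divided by T. The minimal sketch below uses the temperatures mentioned in this post; the function name is just for illustration, and a peak falling outside the 3.4 - 22 μm band only means much weaker emission in the band, not zero.

```python
WIEN_B_UM_K = 2897.77  # Wien displacement constant, micrometre-kelvin

WISE_BAND_UM = (3.4, 22.0)  # wavelength range quoted in the post


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (micrometres) at which a black body at temp_k emits most strongly."""
    return WIEN_B_UM_K / temp_k


for label, temp_k in [("room-temperature optics", 289.0),
                      ("object at earth orbit", 255.0),
                      ("radiatively cooled detector", 75.0),
                      ("hydrogen-cooled detector", 8.0)]:
    peak = peak_wavelength_um(temp_k)
    in_band = WISE_BAND_UM[0] <= peak <= WISE_BAND_UM[1]
    print(f"{label:28s} {temp_k:6.0f} K  peak {peak:7.1f} um  inside WISE band: {in_band}")
```

An uncooled 289K instrument peaks near 10 μm, right in the middle of the band it is trying to observe, while an 8K instrument peaks far outside it.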

So the WISE instruments have to be cooled to operate. The original mission used solid hydrogen evaporation to cool the instrument to 8K. It was able to detect objects warmer than 70–100 K. The hydrogen ran out.

Even in the "cool" phase of the mission the WISE mission couldn't detect 50K Kuiper Belt objects. Neptune (75K average) would have barely been detectable.

The NEOWISE mission used radiative cooling to cool the detector to 75K by staring into deep space. But the 12 and 22 μm detectors (which have to be much colder) were toast.

Further, there is a tradeoff between aperture, field of view, and focal length. Focal length (enlargement) comes at the expense of field of view. WISE is afocal, which in my limited understanding means it is just detecting energy in the exposure and not trying to form an image. Perhaps someone more familiar with telescopes can explain afocal.

WISE had a 47 arcmin field of view from a 40 cm telescope that took 8.8 second exposures with 10% overlap.

The GTC for comparison is a 10.4 meter telescope that has a 12.5 arcsec field of view (although there are a number of instruments attached with wider field of view and the telescope has a number of foci).

So a WISE type telescope with long term cryogenic cooling (8K) could effectively find all objects inside the orbit of Uranus. The collector size (aperture) would control the minimum object size detected. Larger is better.

Filakius

Adept

Yes.., it would be like pointing a scope back at Earth and seeing the planet and moon.., but not our cars, planes, rocket ships or satellites. For a spaceship to be detected.., it would need to be much closer to the telescope than the target planet.

This would be like holding a sheet of newspaper in front of your face.., and waiting for me to tell you to look for a high altitude 747 through a tiny pin hole.

Or strapping very powerful binoculars to your head.., and telling you to walk around and look at specific objects.

Filakius

Adept

For "tracking apps" like FlightRadar24 and for real life pilots and Air Traffic Controllers.., when an aircraft is flying out of coverage Flightradar24 keeps estimating the position of the aircraft for up to 2 hours if the destination of the flight is known. For aircraft without known destination, position is estimated for up to 10 minutes. The position is calculated based on a height and speed given by ATC the pilot must maintain.., and in most cases it's quite accurate, but for long flights the position can in worst cases be up to about 100 km (about 55 nautical miles) off.
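Estimating a position from a last known fix, a heading and a speed is plain dead reckoning. Here is a minimal sketch using the standard great-circle destination formula on a spherical Earth. It is not how Flightradar24 actually does it (their method isn't described here), and the function names and example numbers are assumptions for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation


def dead_reckon(lat_deg, lon_deg, bearing_deg, speed_kmh, hours):
    """Estimated (lat, lon) in degrees after flying a constant initial bearing."""
    angular_dist = speed_kmh * hours / EARTH_RADIUS_KM  # radians along the great circle
    lat1, lon1 = math.radians(lat_deg), math.radians(lon_deg)
    bearing = math.radians(bearing_deg)

    lat2 = math.asin(math.sin(lat1) * math.cos(angular_dist)
                     + math.cos(lat1) * math.sin(angular_dist) * math.cos(bearing))
    lon2 = lon1 + math.atan2(
        math.sin(bearing) * math.sin(angular_dist) * math.cos(lat1),
        math.cos(angular_dist) - math.sin(lat1) * math.sin(lat2))

    lon2_deg = (math.degrees(lon2) + 540.0) % 360.0 - 180.0  # wrap to [-180, 180)
    return math.degrees(lat2), lon2_deg


def drift_km(ground_speed_error_kmh, hours):
    """Along-track error that builds up if the assumed ground speed is wrong."""
    return ground_speed_error_kmh * hours


# Assumed example: last fix on the equator, heading due east at 900 km/h.
print(dead_reckon(0.0, 0.0, 90.0, 900.0, 1.0))
# A 50 km/h error in assumed ground speed (e.g. unexpected winds) over 2 hours:
print(drift_km(50.0, 2.0), "km off")
```

The second function shows why the estimate degrades on long legs: a fairly small ground-speed error sustained for two hours is already enough to produce the roughly 100 km worst case.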

PRIMARY RADAR

ATC Primary radar (no transponder) is not as good as we assume. Planes lose contact when flying over oceans, remote areas, and deserts.., and revert to procedural controls. The average distance is 160 - 200NM before radar loses an object.

Most primary radars (non transponder) used for long range surveillance in ATC operate in the L-Band, whose signals do not follow the curvature of the earth very well. At low altitude the range is limited because the aircraft are below the horizon. Radar is also shielded by terrain; in mountainous areas it is difficult to cover the valleys. In Europe, and I believe also in the US, high altitudes are well covered by primary radar, but at low altitude there are many gaps.
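The "below the horizon" limit can be estimated with the common rule of thumb for radar line of sight, about 1.23 × √(height in feet) nautical miles per end, which assumes standard atmospheric refraction (the 4/3-earth model) and flat terrain. A minimal sketch, with the 100 ft antenna height being an assumed value:

```python
import math


def radar_horizon_nm(antenna_ft: float, target_ft: float) -> float:
    """Approximate maximum line-of-sight range in nautical miles.

    Rule of thumb assuming standard refraction and no terrain blocking.
    """
    return 1.23 * (math.sqrt(antenna_ft) + math.sqrt(target_ft))


ANTENNA_FT = 100.0  # assumed antenna height above the surrounding terrain

for target_ft in (500.0, 1000.0, 10000.0, 35000.0):
    print(f"target at {target_ft:7.0f} ft: visible out to "
          f"{radar_horizon_nm(ANTENNA_FT, target_ft):5.0f} NM")
```

Under these assumptions an airliner at cruise altitude stays above the horizon out to a couple of hundred nautical miles, while something at 1,000 ft drops out of sight at around 50 NM, before terrain is even considered.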

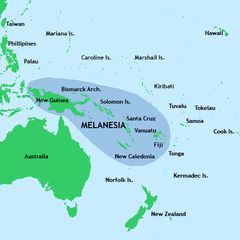

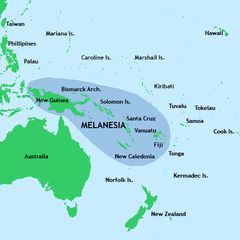

Air Traffic Control (ATC) radar locations in Australia, each reaching only approx 160NM.

Locations of U.S. operational weather and air traffic control radars.

When the screens go dark, ATC breaks out the flight progress strips (and possibly the shrimp boats or other airplane-substitutes to lay on a map & push around) and uses brain power to substitute for the computer and radar. To facilitate separation in a non-radar environment the controllers are going to chuck you (and every other IFR flight) on airways, at standard IFR altitudes, quite probably with a specified airspeed, and they're going to route you navaid-to-navaid. (IFR: Instrument Flight Rules)

By ensuring all flights are on known airways, at known altitudes, and at known speeds controllers have a good idea of who is where in their airspace. They will supplement this mental picture by asking pilots to report crossing certain fixes (intersections/radials), so that they know exactly where that flight is when they make their report (which will help them account for winds aloft, and give them an idea of what the flight's ground speed is in addition to the airspeed they're asking them to fly).

ATC aircraft separation is built on the assumption of no radar, relying instead on position reports at compulsory reporting points.

All of the above provides IFR to IFR separation, which is what the controller is concerned with. IFR-to-VFR (Visual Flight Rules) separation, which is normally supplemented by radar, becomes the responsibility of the pilots ("See and Avoid").

MILITARY

Some NAVY ships have radars on board.., but their range is generally not much more than 250NM. They simply stay close enough to each other to form a perimeter / fence. Here is an example from Japan's military radar defence.

A lower band (HF - UHF) radar can be used to look over the horizon (OTH radar). This type of radar is used for Air Defence purposes. The resolution is very poor compared to radars used for ATC, but it serves well as an early warning system for incoming enemy aircraft and missiles. This type of radar requires huge antenna arrays, over a kilometre long, and they consume enormous amounts of power. The effective range is typically about 3000 km. We have one in Australia that points towards Asia called the Jindalee Operational Radar Network.

CasualBystander

Celestial

Depends on how warm the spacecraft is.

Don't know how much "focus" there is to WISE type of instruments. The cells of the detection array will record a heat signature at some coordinates and a narrow field of view telescope could be used to identify it.

CasualBystander

Celestial

Well...

All MH370 did was turn off its ATC transponder to disappear.

Most UFOs do not come equipped with an ATC transponder.

Most of its flight was not captured by any radar. If it was captured by satellite or over the horizon radar, a lot of governments hid that fact, causing 100s of millions of dollars to be wasted searching.

If a UFO penetrated DC airspace it would be identified.

Otherwise not so much.

Toroid

Founding Member

"Assuming an advanced ET race managed to send a vehicle to our planet.., would it be able to enter Earth's atmosphere without alerting everybody? Or do we humans have the skies monitored so well, this would be impossible."

The planet is guarded by humans and aliens. The Russian Alien Race Book claims Earth is/was guarded by a collective of races called The Council of 5 that were at one time known as The Council of 9. Apparently four races dropped out.

www.youtube.com/watch?v=JV4ukcYF8n0

humanoidlord

ce3 researcher

nope they dont have heat fins

because they arent spaceships but solid "holograms", well not really its quite hard to explain